Two weeks ago, I actually infiltrated the local Test meetup, which was entertaining, not just for the Bath Gem Ale which JustEat has on tap in their meeting area, but because I sat there, beer in hand, while speakers covered Applied Exploratory Testing, what it's like turning up as a QA team at a new company, and the patterns-of-untestability that you encounter (and how to start to get things under control).

This week, I took a break from worrying about the semantics of flush() and its impact on the durability of timeline 1.5 event histories (i.e. why my incomplete apps aren't showing up in an ATS-backed Spark History server if file:// is the intermediate FS of the test run). I wandered down to the Watershed Cinema with Tom White, and a light hangover related to Tom White's overnight stay, which included an evening visit to the Bravas Tapas Bar, and into a one-day dev conference, Voxxdev Bristol 2016.

It was a good day. Oracle have been putting a lot of effort into the conf as a way of raising the visibility of what's going on in tech in the area, to make more people aware that the West of England is a more interesting place to be than London, and, with other companies and one of the local universities, had put together a day-long conference.

I was one of the speakers; I'd put in my Household Infosec talk, but the organisers wanted something more code-related, and opted for Hadoop and Kerberos, the Madness Beyond The Gate. I don't think that was the right talk for the audience. It's really for people writing code to run inside a Hadoop cluster, and to explain to the QE and support people that the reason they suffer so much is that those developers aren't testing on secure clusters (that's the version I gave at Cloudera Palo Alto last month). When you are part way into a talk and you realise that you can't assume the audience knows how HDFS works, then you shouldn't really be talking to them about retrieving block-tokens from the NN from a YARN app handed off a delegation token in the launch context by a client authed against the KDC. Normally I like a large fraction of the audience to come out of a talk feeling they'd benefited; this time I'm not sure.

I felt a bit let down by the Oracle big data talk, though impressed that people are still writing Swing apps. I was even more disappointed by the IoT talk, where the speaker not only accused Hadoop of being insecure (he missed my talk, then), but most of his slides seemed lifted from elsewhere: one a Cisco IoT arch, one a Dell Hadoop cluster design. Julio pointed out later that the brontobyte slide was one HP Labs have been using. Tip: if you use others' slides, either credit them or make sure the authors aren't attendees.

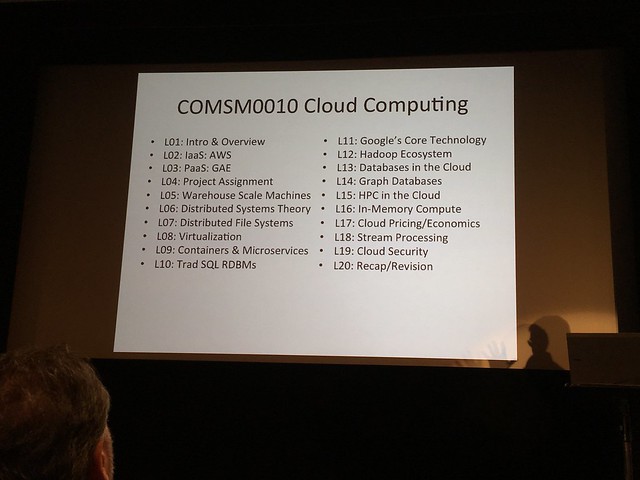

I really liked some of the other talks. There was a great keynote by a former colleague, Dave Cliff, now at Bristol Uni, talking about what they are up to. This is the lecture series on their cloud computing course.

That's a big change given that in 2010, all they had was a talk Julio and I gave in the HPC course.

I might volunteer to give one of the new course's talks in exchange for being able to sit in on the other lectures (and exemption from exams, tutorials and homework, obviously).

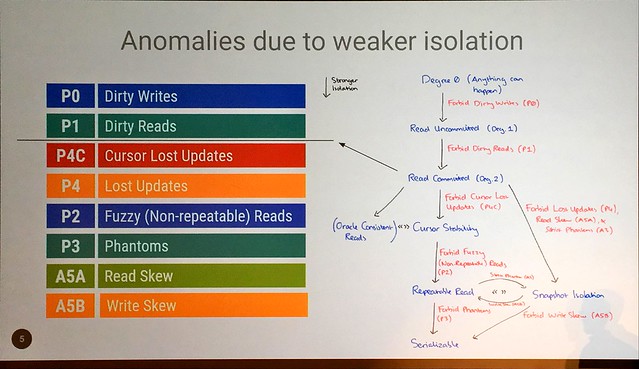

My favourite talk turned out to be Out of the Fire Swamp, by Adrian Colyer.

Adrian writes "The morning paper" blog, which is something I'm aware of, in awe of and in fear of. Why fear? There are too many papers to read; I can't get through 1/day, and to track the blog would only make it clear how behind I was. I already have a directory tree full of papers I think I need to understand. Of course, if you do read a related paper/day, it probably gets easier, except I'm still trying to complete [Ulrich99] and relate it to modern problems.

Adrian introduced the audience to data serialization, causality and happens-before, then moved on to linearizability [HW90].

This was a really good talk.

All the code we write is full of assumptions. We assume that n + 1 > n, though we know in our heads that if n = 2^31 - 1 and it's stored in a signed int32, that doesn't hold. (More formally: in two's complement binary arithmetic, for a signed register of width w, n + 1 > n only holds for n < 2^(w-1) - 1.)
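As a minimal sketch of that assumption failing, here is what Java's wrapping 32-bit `int` arithmetic does at the top of the range. The class and method names (`OverflowDemo`, `successorIsGreater`) are made up for illustration; `Math.addExact` is the standard JDK call that throws rather than wraps.

```java
public class OverflowDemo {

    /** True when n + 1 > n holds under Java's wrapping 32-bit int arithmetic. */
    public static boolean successorIsGreater(int n) {
        return n + 1 > n;
    }

    public static void main(String[] args) {
        // Holds for ordinary values.
        System.out.println(successorIsGreater(41));                      // true

        // Fails at 2^31 - 1: the sum wraps round to Integer.MIN_VALUE.
        System.out.println(successorIsGreater(Integer.MAX_VALUE));       // false
        System.out.println(Integer.MAX_VALUE + 1 == Integer.MIN_VALUE);  // true

        // Math.addExact refuses to wrap and throws instead.
        try {
            Math.addExact(Integer.MAX_VALUE, 1);
        } catch (ArithmeticException e) {
            System.out.println("addExact detected the overflow");
        }
    }
}
```

If the assumption matters to your code, `Math.addExact` (or widening to `long`) makes the failure loud rather than silent.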

Sometimes even that foundational n+1 > n assumption catches us out. We also assume that two assignments in source code happen in order, though in fact the JVM reserves the right to re-order things (and has even, in the past, done so wrongly), and anyway, the CPU can reorder stuff as well.
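To make the ordering point concrete, here is a small sketch of the usual Java fix, not something from the talk: a `volatile` flag gives you a happens-before edge, so the write to `data` cannot be seen as happening after the write to `ready`. The class and its members (`PublishDemo`, `data`, `ready`, `runOnce`) are hypothetical names for illustration. Remove `volatile` and the Java Memory Model no longer guarantees the reader ever sees the flag, or sees 42 if it does.

```java
public class PublishDemo {
    private int data;                // plain field, no ordering guarantees on its own
    private volatile boolean ready;  // volatile write/read pair creates happens-before

    void writer() {
        data = 42;     // (1) ordinary write
        ready = true;  // (2) volatile write: (1) may not be reordered after it
    }

    int reader() {
        while (!ready) {     // volatile read; spin until the writer has published
            Thread.yield();
        }
        return data;         // guaranteed to observe the write made at (1)
    }

    /** Runs one writer and one reader thread; returns the value the reader saw. */
    public static int runOnce() {
        PublishDemo demo = new PublishDemo();
        int[] seen = new int[1];
        Thread r = new Thread(() -> seen[0] = demo.reader());
        Thread w = new Thread(demo::writer);
        r.start();
        w.start();
        try {
            r.join();  // join() makes the reader's write to seen[0] visible here
            w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce());  // 42
    }
}
```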

What people aren't aware of in modern datacentre-scale computing is what assumptions the systems underneath have made in order to give their performance/consistency/availability/liveness/persistence features or whatever it is they offer. To put it differently: we think we know what the systems we depend on do, but every so often we find out our assumptions were utterly wrong. What Adrian covered was the foundational CS-complete assumptions that you really had better be asking hard questions about when you review technologies.

He also closed with a section on future system trends, especially storage, with things like non-volatile DIMMs (some capacitor + SSD to do a snapshot backup on power loss), faster SSD, and those persistent technologies with performance between DRAM and SSD: looking at a future where tomorrow's options about durability vs. retrieval time are going to be (at least at some price points) significantly different from today's. Which means that we'd better stop hard coding those assumptions into our systems.

Overall, a nice event, especially given it was in the second closest place to my house where you could hold a conference (Bristol University would have been 7 minutes rather than 15 minutes' walk). I had the pleasure of meeting some new people in the area working on stuff, including the illustrious James Strachan, who'd come up from Somerset for the day.

I liked the blend of some CS "Lamport Layer" work with practical stuff; it gives you both education and things you can make immediate use of. I know Martin Kleppmann has been going round evangelising classic distributed computing problems to a broader audience, and Berlin Buzzwords 2016 has a talk, Towards consensus on distributed consensus. It's clearly something the conferences need.

If there was a fault, as well as some of the talks not being ideal for the audience (mine), I'd say it's got the usual lack of diversity of a tech conference. You could say "well, that's the industry", but it doesn't have to be, and even if that is the case it doesn't have to be so unbalanced in the speakers. At the BBuzz event, not only are there two women keynoting, we submission reviewers were reviewing the talks anonymously: we didn't know who was submitting, instead going on the text alone.

For the next Bristol conference, I'd advocate going closer in Uni/industry collaboration by offering some of the students tickets. Maybe even some of the maths & physics students rather than just CS. I also think maybe there should be a full strand dedicated to CS theory. Seriously: we can do causality, formality, set theory & relational algebra, paxos+ZK+REEF, reliability theory, graphs etc. Things that apply to the systems we use, but stuff people kind of ignore. I like this idea ... I can even think of a couple of speakers. Me? I'd sit in the audience trying to keep up.

[HW90] Herlihy and Wing, Linearizability: A Correctness Condition for Concurrent Objects, 1990.

[Ulrich99] Ulrich, A.W., Zimmerer, P. and Chrobok-Diening, Test Architectures for Testing Distributed Systems, Proceedings of the 12th International Software Quality Week, 1999.
